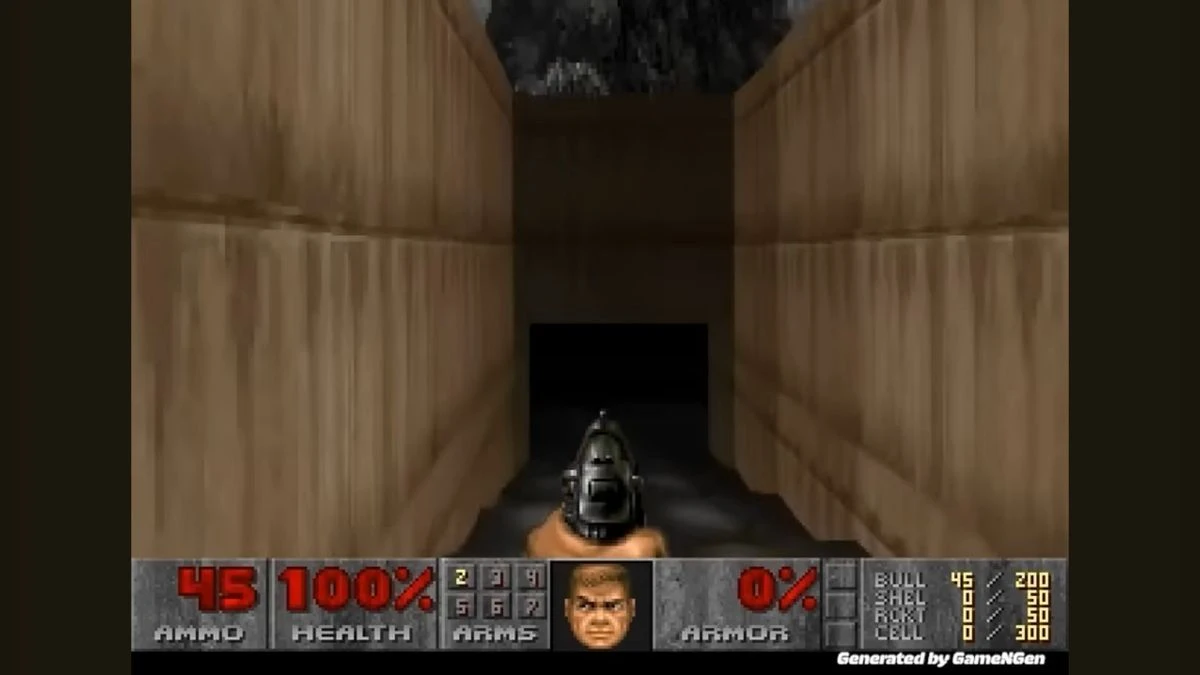

It's pretty trippy: a neural network generates the game frame by frame while 'playing Doom'.

"But can it run Doom?" The question has followed almost every piece of tech imaginable, to the point of becoming a benchmark for geeky creativity. From running Doom on a pregnancy test to controlling the game with a toaster, it's been everywhere.

Researchers from Google Research, Google DeepMind and Tel Aviv University have managed to run the classic shooter using nothing but a neural network (via Futurism). The network generates each frame based on what it learned during training. A video shows it running in real time, though the approach has some limitations.

A neural network is a type of AI structure loosely modeled after the brain. It uses machine learning to process inputs such as commands and prompts. Neural networks are used in predictive models because of their ability to grasp concepts more broadly.

Improving them largely involves iterating in small steps over large datasets. When a network "trains" on something, it takes in data from that source and adjusts itself to learn patterns from it. Generative AI models, in particular, tend to produce output very similar to their source material until their training datasets are large enough.

It's impressive that a neural network can run such a complex game. However, the video is slow-paced and heavily cut, capturing only the most fluid and realistic moments of gameplay. This isn't meant to minimize the work, but to put it in context: you can't just go out and play Doom on a neural network right now. It's a test, and not much else.

The accompanying paper acknowledges the experiment's limitations while making a case for the technology's future. The neural network has limited memory and can only retain three seconds of actual gameplay. It seems to retain HUD elements, but positional and other information is lost during play. As the video shows, it also sometimes fails to predict the following frames accurately, producing visual glitches and areas that lack clarity.
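The three-second limit behaves like a sliding window: the model only conditions on the most recent stretch of play, so anything older simply falls out of view. The minimal Python sketch below illustrates that idea. It is not the researchers' model. The 20 frames-per-second rate is a hypothetical assumption, and each step is reduced to a plain action label instead of real images.

```python
from collections import deque

# Illustrative assumptions, not the paper's actual settings:
FPS = 20                                # hypothetical frames per second
CONTEXT_SECONDS = 3                     # memory span described in the article
CONTEXT_FRAMES = FPS * CONTEXT_SECONDS  # 60 steps of history


def play(actions, window=CONTEXT_FRAMES):
    """Simulate a model that only sees the last `window` steps of play.

    Each step is reduced to an action label. A real model would condition
    on past frames (images) and actions; this toy version just records
    which actions are still inside the window at every step.
    """
    history = deque(maxlen=window)  # appending to a full deque drops the oldest item
    visible_per_step = []
    for action in actions:
        history.append(action)
        visible_per_step.append(set(history))
    return visible_per_step


if __name__ == "__main__":
    # Open a door once, then keep walking for 60 more steps.
    steps = play(["open_door"] + ["walk_forward"] * CONTEXT_FRAMES)
    print("open_door" in steps[CONTEXT_FRAMES - 1])  # True: still inside the window
    print("open_door" in steps[CONTEXT_FRAMES])      # False: slid out of the window
```

A fixed-size buffer keeps the work per frame bounded, but it also means the model has no record of events older than the window. One plausible reading of why HUD elements survive while other details are lost is that the HUD is redrawn in every frame, so it never leaves the window.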

The paper continues: "We note that our technique is not Doom-specific except for the reward function for the RL agent." The RL (reinforcement learning) agent is the AI player whose recorded gameplay was used to train the model.

The paper goes on to suggest that the same basic network could be used to emulate other games, and that a similar engine could serve as a replacement for, or an assistant to, programmers working on actual games. However, the network is trained on specific games and their functions, and no argument is made as to how this could translate into creating new games. The paper also says that a similar engine could "include guarantees on frame rate and memory footprints," then adds, "We haven't experimented yet and much more research is required, but we are eager to try!"

Although it's unclear how well the network will work beyond what we've seen so far, I think the technology is impressive, even if the paper's goals for the future seem a little lofty.
